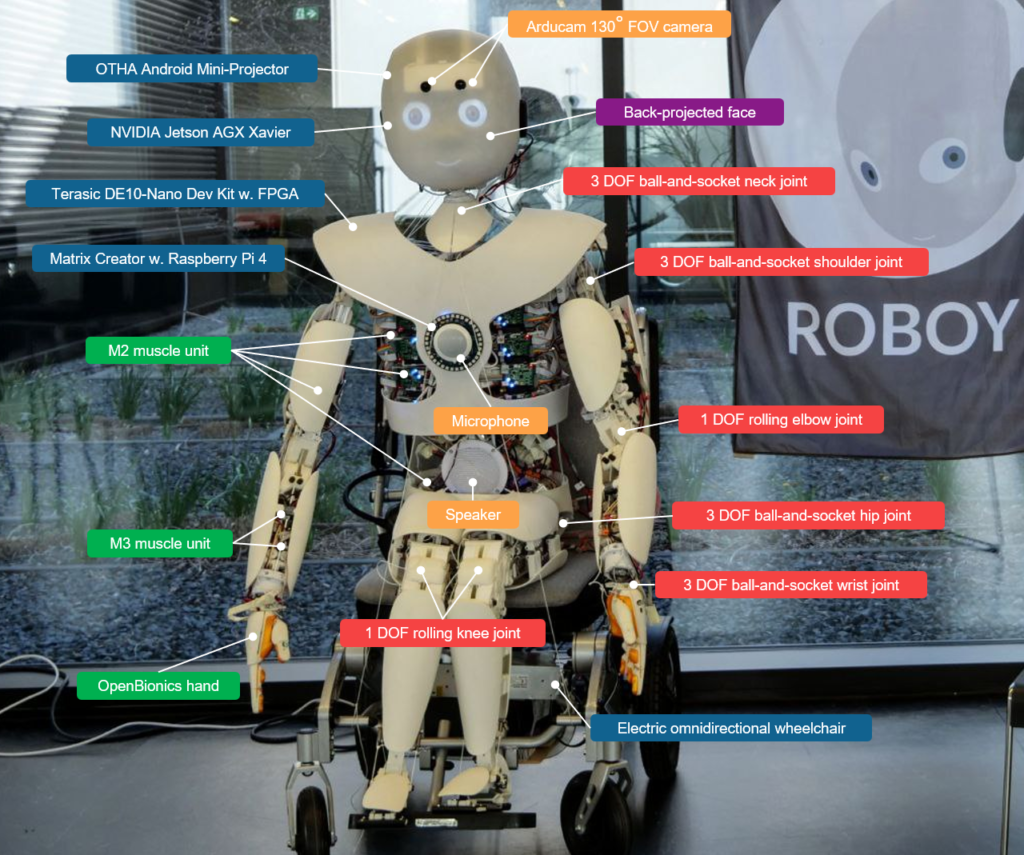

Roboy 3.0

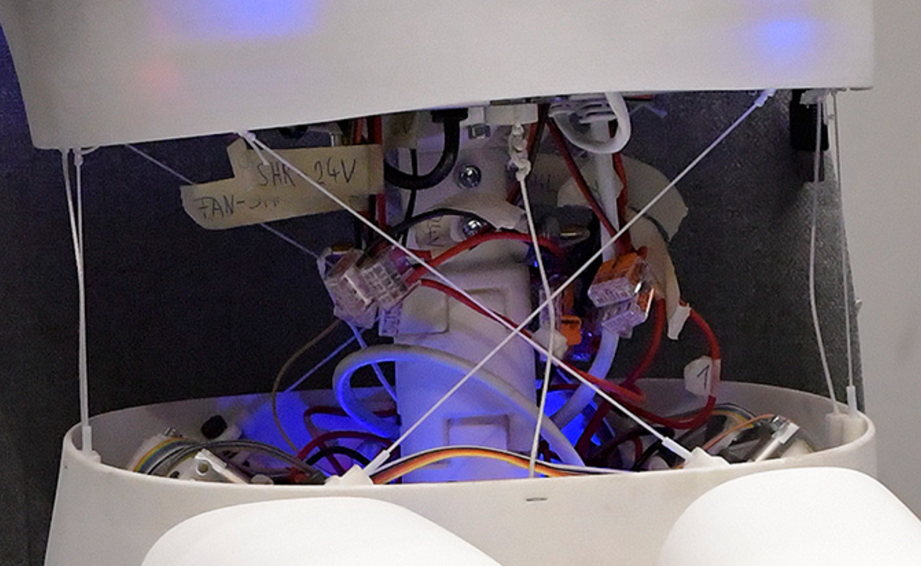

Roboy 3.0 is our third generation musculoskeletal tendon-driven humanoid robot.

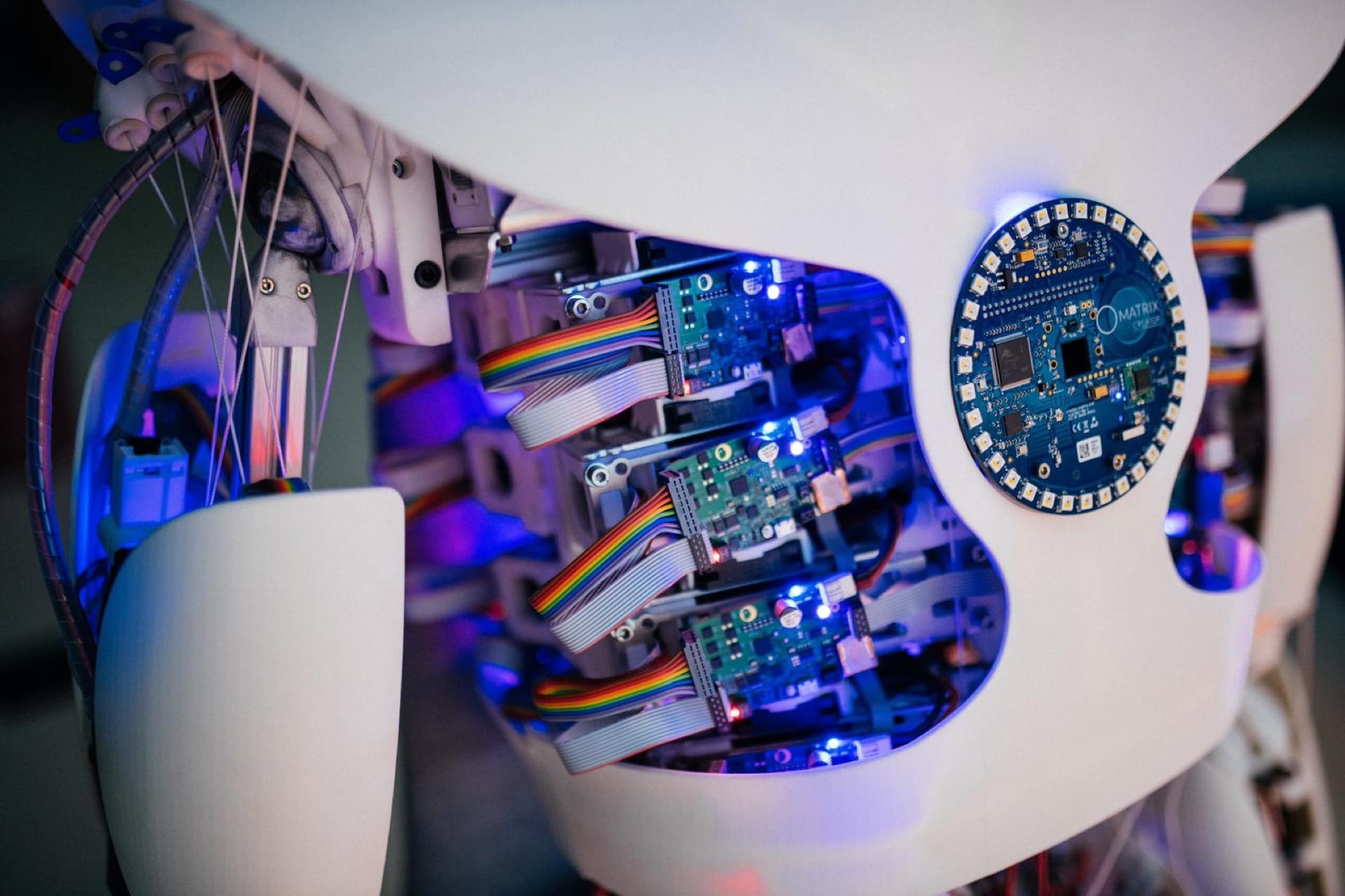

Actuation

Roboy’s actuation key element is a muscle unit – a series elastic actuator fully developed in-house. Roboy’s movement is based on the antagonistic-protagonistic muscle activation. The muscle units come in 2 types: M2 and M3. M2 muscle units are more powerful and actuate the neck, shoulders, elbows and torso. M3 muscle units have a smaller form factor and actuate the wrists.

Muscle Units

M2 muscle unit

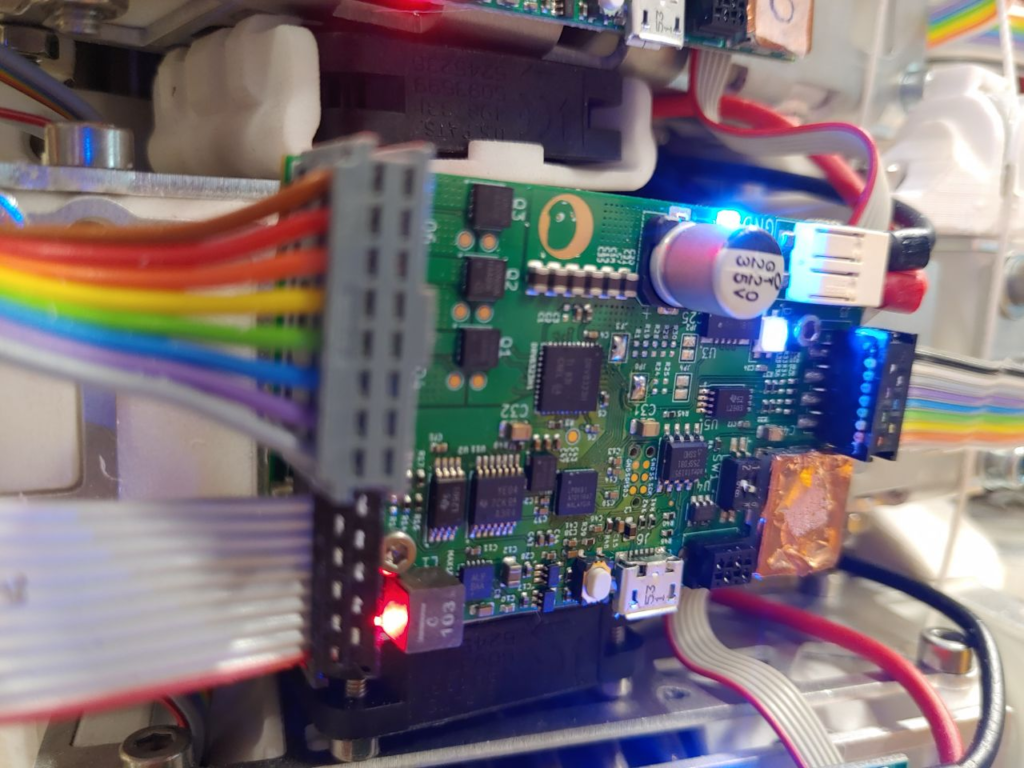

series elastic actuator consisting of a BLDC motor surrounded by a torsional spring that pulls on a dyneema tendon and a force sensor, generating up to 300N of tendon force; control modes: PWM, position and force; custom motorboard with tinyFPGA and icebus interface

M3 muscle unit

series elastic actuator consisting of a servo connected to a string that pulls on a dyneema tendon; control modes: PWM, position and spring displacement; icebus interface

Joints Overview

| Neck |  | 3 DOF joint controlled by six M2s, joint position via an array of 3D magnetic sensors |

| Elbow |  | 1 DOF rolling joint controlled by two M2s, joint position via magnetic angle sensor |

| Shoulder |  | 3 DOF joint controlled by eight M2s, joint position via an array of 3D magnetic sensors |

| Hand |  | 9 DOF in fingers controlled by four linear motors, no feedback |

| Spine |  | bendable bar, controlled by four M2s, no feedback |

Control

Roboy 3.0 is a ROS1-based robot (melodic upward).

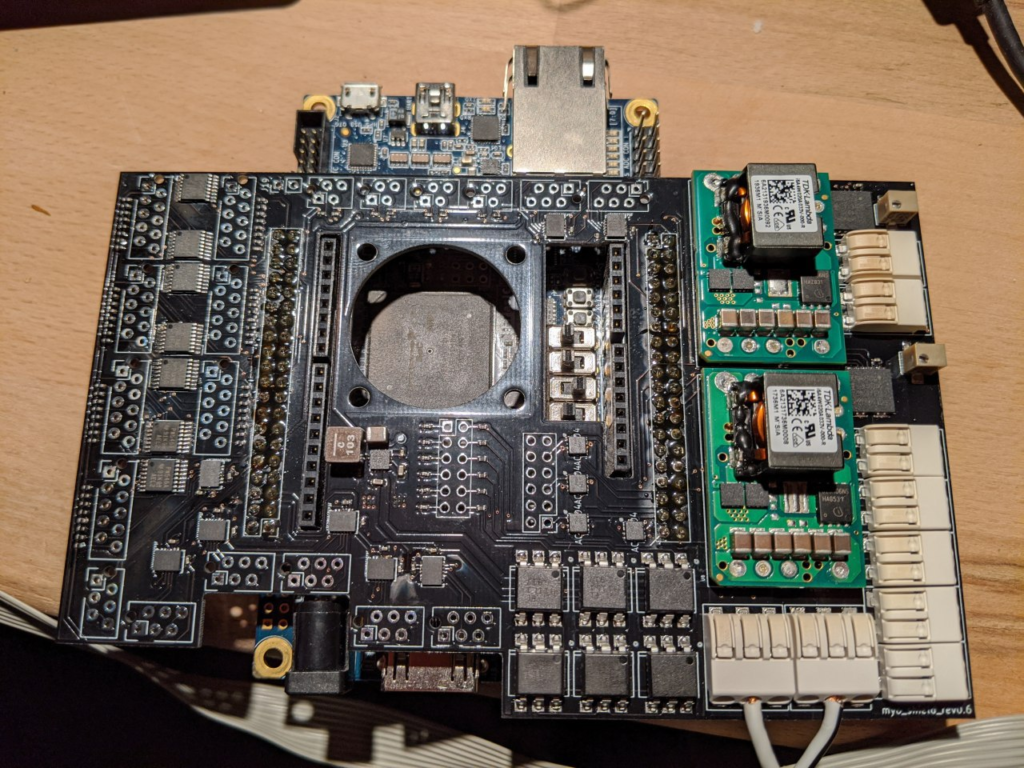

Low-level Motor Control

| A central compute unit for the low-level motor control is a Terasic DE10-Nano Dev kit w. Cyclone V SoC FPGA. Its arm core runs Ubuntu 16.04 and hosts the roboy_plexus ROS node, whereas its FPGA communicates to all motors via IceBus, a custom RS485 based bus protocol, as well as collects sensor data via I2C bus. This unit communicates via RJ45 LAN to the rest of the system. |

| Each IceBus line can have up to 8 muscle M2 units connected. Each muscle unit is equipped with a custom motor driver board. Each board has an FPGA that implements the motor PID controller and reads out encoders values (motor position and torsional spring rotation). Motor control modes available:positionPWMforce (in development) |

Joint-level Control

Joint-level control of Roboy 3.0 is based on CARDSflow – an open-source framework for design, simulation and control of cable-driven robots developed jointly by the lab of Prof. Darwin Lau at CUHK and Devanthro.

CARDSflow calculates the required tendon length to reach a desired joint angle. RVIZ and RQT plugins are available.

Inverse Kinematics

While CARDSflow implements inverse kinematics as well, currently Roboy 3.0 is using PyBullet for inverse kinematics calculations due to superior performance. PyBullet serves as a great simulation environment as well.

Telepresence

Devanthro has also developed a teleoperation solution to control Roboy 3.0. It allows low-latency streaming of various modalities between the operator and the robot, such as:

- bi-directional audio (30 ms latency)

- stereo camera stream (250 ms latency)

- gaze and end-effector control & navigation (50 ms latency)

The system works with Oculus Quest. Data transmission is based on Animus SDK developed by Cyberselves Ltd.

Demo

Full System

Gaze and end-effector control

Handshake

Roboy 3.0 is a soft & compliant system: In this video it can be clearly seen how the recipient safely shakes the robots hand, overriding the operators motion, thereby making the handshake natural for both recipient and operator.

Hug

Due to the compliant and safe nature of the robot, it exhibits only a minimal contact force to the recipient, just enough to feel the hug. The calibration is set so that the hands are maximally closed when the operator hugs herself, thereby giving her haptic feedback on the hug – giving both the recipient and the operator some feeling of being hugged. (The self-hug lacks the touch component on the back of the operator and as such is not fully congruent with the real experience of being hugged, it still conveys some haptic feedback component).

That’s our current technology stack …

or how you can experience it yourself!

The right robot for you to work with us? Let’s talk!